Find and fix non-searchable PDFs

I use a ScanSnap ix500 scanner to scan a lot of paper into PDFs on my iMac. And thanks to the ScanSnap's bundled optical character recognition (OCR), all of those scans are searchable via Spotlight. While the OCR may not be perfect, it's generally more than good enough to find what I'm looking for.

However, I noticed that I had a number of PDFs that weren't searchable—some electronic statements from credit cards and utility companies, and some older documents that predated my purchase of the ScanSnap, at least based on some tests with Spotlight.

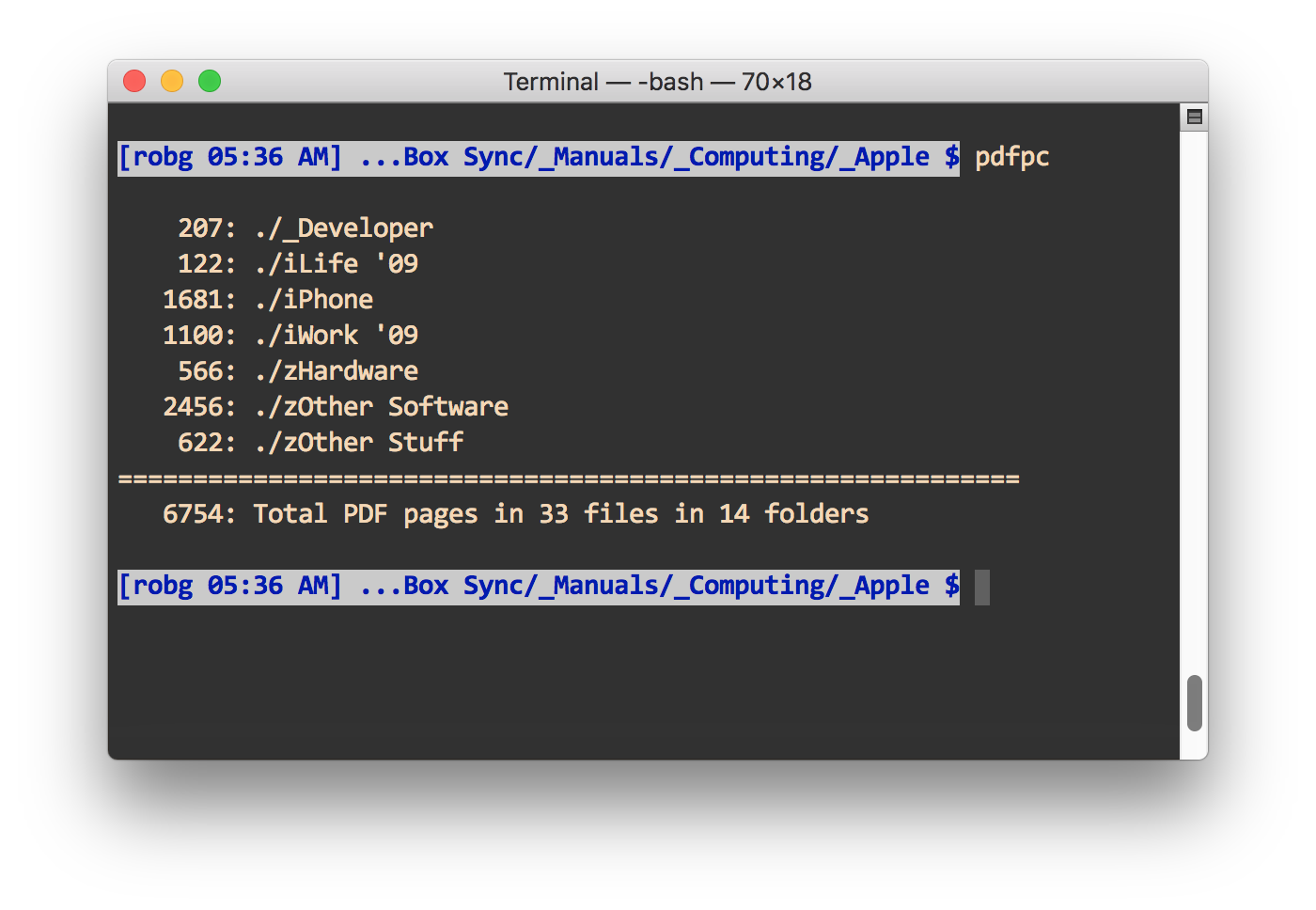

But I wanted to know how many such PDFs I had, so I could run OCR on all of them, via the excellent PDFPen Pro app. (The Fujitsu's software will only perform OCR on documents it scanned.) The question was how to find all such files, and then once found, how to most easily run them through PDFPen Pro's OCR process.

In the end, I needed to install one set of Unix tools, and then write two small scripts—one shell script and one AppleScript. Of course, you'll also need PDFPen (I don't think Pro is required), or some other app that can perform OCR on PDF files.

It's also a very simple-minded script, as it does just one thing: it copies my public IP address to the clipboard and shows it in a pop-up message, as seen at right. OK, so that's two things, but they're very closely related.

It's also a very simple-minded script, as it does just one thing: it copies my public IP address to the clipboard and shows it in a pop-up message, as seen at right. OK, so that's two things, but they're very closely related.